Governments should force AI firms to run ‘lots of safety experiments’ – Godfather of AI, Geoffrey Hinton

Governments should force AI companies to run safety experiments, insisted Geoffrey Hinton, the Godfather of AI.

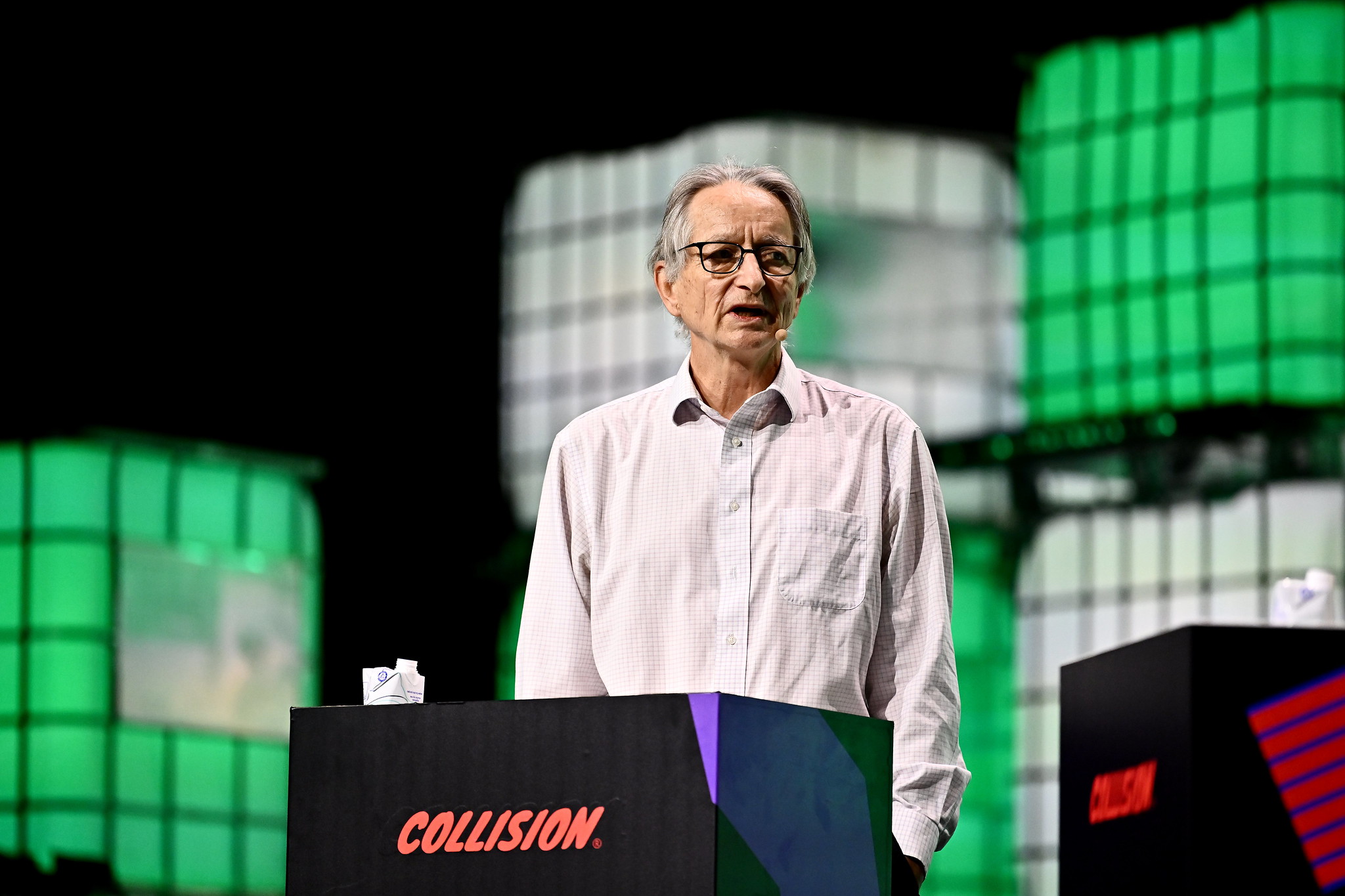

Speaking at Collision in Toronto, Geoffrey (pictured above) said that AI posed a whole variety of significant threats to humanity – not just the risk of enslavement. These threats include autonomous weapons – which will creep up and be “intent on killing you” – bioterrorism, cybercrime, fake videos corrupting elections, and surveillance by authoritarian regimes.

Regarding the existential threat posed by AI, Geoffrey said that if it gets to a point where firms have “things [that are] not quite as intelligent as us”, governments need to step in.

“The only thing powerful enough to make the big companies invest significant amounts of money in doing experiments on that … is governments. So I think governments should be involved in forcing the big AI companies to do lots of safety experiments.”

Geoffrey also proposed exposing the public to fake content in order to “inoculate” them against the tide of fake videos that could be created by AI.

“One of the dangers we didn’t talk about in detail is fake videos corrupting elections. We’re gonna see a lot of that this year. And my suggestion is we should try and inoculate the public.”

Geoffrey explained: “I was at a meeting with a bunch of billionaire philanthropists who wanted to know what they could do to help. And my suggestion was pay for a lot of advertisements, where you have a very convincing fake video. And then right at the end of it, it says, ‘this was a fake video. That was not Trump, and he never said anything like that’, or ‘that was not Biden, and he never said anything like that’. And it’s just like inoculation, you have a version of this then attenuated by saying it was a fake one, so that people can build up resistance.”

Until last year, Geoffrey worked at Google, but left the tech giant to be able to talk more freely about the dangers of unregulated AI.

The Godfather of AI said that although the existential threat from AI is the one spoken about the most, surveillance is the most urgent one: “I think surveillance is something to worry about. So, AI is going to be very good at surveillance. It’s going to help authoritarian regimes stay in power. Now, in the US, the founders thought about this kind of thing. And they put in protections against it, like the Supreme Court.”

However, Geoffrey pointed out that the US Supreme Court was now itself open to questioning following recent controversies: “Unfortunately, the current Supreme Court isn’t a very good protection … You’ve got one guy who’s probably a sexual predator. You got another guy who takes so many bribes he can’t keep count of them. And you’ve got a third guy who’s so keen to keep Trump out of jail, that he’s willing to contemplate that the President might be allowed to shoot his political opponents and not be legally liable.”

When questioned on whether political figures are capable of addressing these problems, Geoffrey said: “It depends on which country you’re in. So in the US, it’s sort of paralyzed now by the fact that one of the parties is dedicated to a lie, right? So you can’t get much done there … in some other countries, they can do more, but it’s difficult.” Geoffrey continued, “In all of these countries that are making up regulations, the regulations always have a little clause in them that say none of these regulations apply to military uses of AI. So defense departments won’t allow governments to rule out lethal autonomous weapons.”

Geoffrey went on to clarify that this is also the case in Europe: “If you look at the European legislation, there’s an explicit clause in there that simply says none of these regulations apply to military applications of AI.”

Regarding lethal autonomous weapons, Geoffrey said: “Just imagine something creeping up behind you, that’s intent on killing you.”

Geoffrey also spelled out the problem of alignment, where an AI system may not be clear about the appropriate approach to solving a problem. “So for example, if you give it the goal of stopping climate change, the obvious way to do that is to get rid of people, but it’ll be smart enough to realize that’s not what you meant.”

“But there’s other cases where even you may not be quite sure what you expect to happen. And also, there’s cases where different people have very different goals. So people talk about the alignment problem as if we all agree on what’s good to do and what’s not good to do! So this, for example: some people think it’s good to drop 2,000-pound bombs on children and other people don’t. They’ve both got their reasons. You can’t align with both of them.”

Geoffrey concluded that “the biggest problem is we don’t align with each other. Right? So you’re going to introduce these very intelligent things, and they’re gonna have a big problem knowing what to do.”

On leaving Google, Geoffrey explained: “I figured I could just warn that the existential threat – the threat that in the long run, these things get smarter than us and go rogue – that’s not science fiction, like Aidan Gomez thinks, that’s real.”

Geoffrey elaborated: “In order to make AI agents, you have to give them the ability to create sub-goals … Now, as soon as you give something the ability to create a sub-goal to get stuff done, it’ll realize there’s a very good sub-goal that works for almost everything, which is get more control. So if I can get more control, I can get stuff done better. So even if I’m totally benevolent, and I just want to achieve what you asked me to achieve, I’ll realise that if I get more control, it’ll be easier to do that. And actually, if these things are much smarter than us, they’ll realise, just take the control away from the people and will be able to achieve what they want much more efficiently. And that seems to me like a very slippery path. Because if you ever get an agent that gets any kind of self-interest, then it’ll say well, you know, find a few more data centres of that other super-intelligence, I’d be able to get a bit smarter, so I’d be able to reflect my interests a bit better. And then you’ll get evolution, and once you get evolution, you’ve got all this nasty competitive stuff.”

Geoffrey went on to say that “the good outcome would be that a super-intelligence just starts running everything and looks after us. And we’re kind of pets of a super-intelligence. That’s Elon Musk’s view of it. And that’s the good outcome.”

However, despite concerns, Geoffrey finished on a positive note: “There’s an equal number of wonderful things that are going to happen. Like for example, in medicine, instead of going to your family doctor, you’re gonna be able to go to a doctor who’s seen 100 million patients and knows your whole genome and the results of all your tests and the results of all your relatives’ tests, and has seen thousands of cases of this extremely rare disease you have and could just diagnose you right. And in North America, there’s more than 200,000 people a year [that] die of poor diagnoses. Already, a doctor on difficult diagnoses gets 40 percent correct. AI gets 50 percent correct. And AI with a doctor gets 60 percent correct. So that’s already like 50,000 people saved right there every year. So there’s going to be wonderful things, particularly in healthcare, but actually anywhere where you want to make a prediction. So there’s gonna be huge increases in productivity. The problem is in our political system to turn those huge increases in productivity into benefits for everybody. So the problem is us, it’s not the machine. The problem is always us.”

Geoffrey’s comments were made as part of a wider discussion on how to control AI at Collision, which is returning to Toronto for its sixth year. Global founders, CEOs, investors and members of the media have come to the city to make deals and experience North America’s thriving tech ecosystem.

More than 1,600 startups are taking part in Collision 2024 – the highest number of startups ever at a Collision event. 45 percent of these are women-founded, and startups have travelled to Toronto from countries including Nigeria, the Republic of Korea, Uruguay, Japan, Italy, Ghana, Pakistan and beyond.

In total, more than 37,800 attendees have gathered at the event, as well as 570 speakers and 1,003 members of the media, to explore business opportunities with an international audience.

739 investors are attending Collision, including Vinod Khosla, founder of Khosla Ventures; Wesley Chan, co-founder and managing partner of FPV Ventures; and Nigel Morris, co-founder and managing partner of QED Investors, as well as nine companies on the Forbes Midas List, and 12 investors from those firms.

Top speakers at Collision include:

- Geoffrey Hinton, Godfather of AI

- Maria Sharapova, entrepreneur and tennis legend

- Aidan Gomez, founder and CEO of Cohere (an AI for enterprise and large language model company, which raised US$450 million at a US$5 billion valuation in June 2024)

- Raquel Urtasun, founder and CEO of Waabi (a Canadian autonomous trucking company)

- Jeff Shiner, CEO of 1Password

- Dali Rajic, president and COO of Wiz (a cloud security platform)

- Alex Israel, co-founder and CEO of Metropolis (an AI and computer vision platform)

- Jonathan Ross, founder and CEO of Groq (an AI chip startup)

- Keily Blair, CEO of OnlyFans

- Autumn Peltier, Indigenous rights activist

About Web Summit:

Web Summit runs the world’s largest technology events, connecting people and ideas that change the world. Half a million people have attended Web Summit events – Web Summit in Europe, Web Summit Rio in South America, Collision in North America, Web Summit Qatar in the Middle East, and RISE in Asia – since the company’s beginnings as a 150-person conference in Dublin in 2009.

This year alone, Web Summit has hosted sold-out events in Qatar, which welcomed more than 15,000 attendees, and in Rio, where more than 34,000 people took part. Our events have been supported by partners including the Qatar Investment Authority, Snap, Deloitte, TikTok, Huawei, Microsoft, Shell, Palo Alto Networks, EY, Builder.ai and Qatar Airways.

Web Summit’s mission is to enable the meaningful connections that change the world. Web Summit also undertakes a range of initiatives to support diversity, equality and inclusion across the tech world, including Impact, women in tech, Amplify, our scholarship program, and our community partnerships.

Useful links

- Collision website: https://collisionconf.com/

- Collision media kit: https://collisionconf.com/media/media-kit

- Collision images: https://flickr.com/photos/collisionconf

- About Web Summit: about.websummit.com